Harmonizing lipidomics

Chatting at a conference a few years ago, lipidomics researchers Markus Wenk and Andrej Shevchenko noticed a problem.

“We were having coffee over a break during the sessions, and we were thinking, ‘There are a lot of papers flying around now on plasma lipidomics,’” Wenk, of the National University of Singapore, said.

Many of those studies were looking for biomarkers, molecules that change reliably during the course of a disease and might someday be used for diagnosis. To detect potential lipid biomarkers, researchers compare the levels of many lipid species in blood plasma from patients with those from healthy volunteers, often without determining an absolute molar quantity of either.

“The situation is very odd,” said Shevchenko, of the Max Planck Institute of Molecular Cell Biology and Genetics. “We cannot compare data … You identify significantly changing lipids, and do maybe thousands of analyses, but I cannot compare my results with yours.”

Not all biological studies must be strictly quantitative. Western blots, fluorescence imaging and many proteomics approaches rely on semiquantitative measurement. Likewise, a lipidomics study might show that people with a given disease have twice as much of a certain lipid as healthy individuals. If later studies back up the finding, the molecule in question begins to look like a promising analyte to screen for the disease.

For a lipid to be useful in the clinic, however, researchers must agree on the identity of the molecule in question and on how much of it to expect in a healthy person. Lipidomics researchers are working toward such agreements; their field-wide project is mostly collegial but sometimes contentious and provides a case study of how scientists create systems of measurement.

Lipidomic beginnings

The most common lipid biomarker is cholesterol. Clinical laboratories with colorimetric readout to measure cholesterol in a patient’s blood plasma. A lab’s confidence in its measurement comes from running control samples with each assay and from .

Lipid researchers have found numerous molecules that might someday predict diseases such as Alzheimer’s, cancer and metabolic disorders as effectively as cholesterol predicts the risk of heart attack and stroke. But cholesterol is by far the most abundant lipid in the blood. To measure molecules with much lower concentrations, scientists must use methods with higher sensitivity, such as mass spectrometry, or MS.

Forty years ago, using a mass spectrometer to measure lipids seemed impossible. The technique starts by ionizing molecules to measure their mass-to-charge ratio; lipids, many of which are nonpolar, can be difficult to ionize.

When he started measuring lipids in the 1980s, Richard Gross, a mass spectrometrist at Washington University in St. Louis, would homogenize buckets of samples to harvest enough of certain subcellular membranes to detect. Though the fundamentals of mass spectrometry are the same, instrumentation has advanced a great deal since then.

“Nothing has changed in the last 40 years,” Gross joked, “except the ion sources have improved by five orders of magnitude, the ion traps have improved, and so has detector resolution. We’ve gone from working with garbage cans and canoe paddles to working in Eppendorfs.”

Gross’ lab was among the first to measure lipids extracted from tissue by MS, publishing its on the work in 1984. “From my perspective, that was the beginning of lipidomics,” he said, “although lipidomics wasn’t going to be a word for 20 years.”

What took the technique so long to catch on? Kai Simons, former director of the Max Planck Institute of Molecular Cell Biology and Genetics, blames the molecular revolution in biology.

“It was DNA, RNA, proteins,” Simons said. “There was so much to do. Many of the most creative young researchers wanted to do what they saw before them, and lipids were not included.”

Nonetheless, analytical chemists continued to make technical progress. In 2003, the word “lipidomics” made its debut in the peer-reviewed literature. Review articles published in quick succession pointed to a trickle of papers demonstrating robust mass spectrometric measurement of lipids and argued that, like the growing field of proteomics, high-throughput lipid measurement was poised to take off.

The legacy of LIPID MAPS

Before lipidomics even entered the lexicon, Edward Dennis of the University of California, San Diego, says he made a case to the National Institutes of Health that the field would be a great investment. The NIH budget had doubled in the previous five years. In 2000, the National Institute of General Medical Sciences introduced a new funding mechanism intended to propel interdisciplinary science forward with up to 10 years of funding for projects with multiple principal investigators. Dennis thought he had just the project.

“Developing an integrated metabolomics system capable of characterizing the global changes in lipid metabolites is a daunting task, but one that is important to undertake,” Dennis wrote in his initial application.

The Lipid Metabolites and Pathways Strategy, or LIPID MAPS for short, got the grant. Its 12 PIs set out to develop rigorous laboratory and bioinformatics techniques for lipidomics with approximately $73 million from the NIGMS over 10 years.

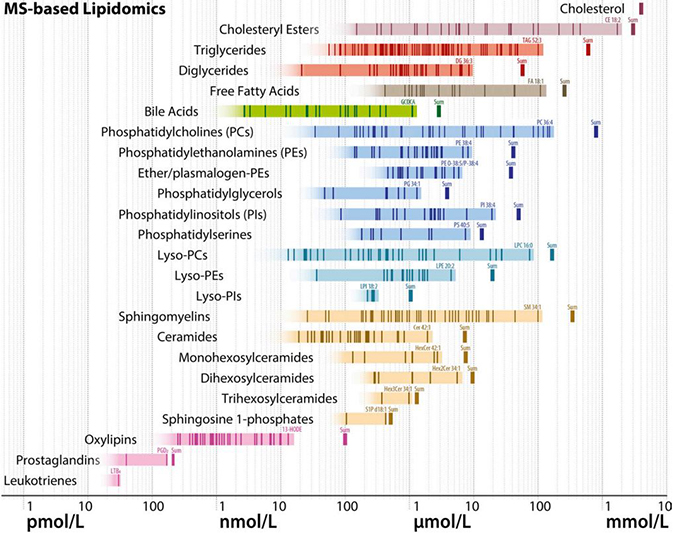

By current estimates, human blood contains millimoles of hydrophobic cholesterol but only tenths of a nanomole of the hydrophilic signaling lipids called eicosanoids: a 10 millionfold difference in molar abundance. With such chemical diversity and varied abundance, lipid measurement requires multiple approaches.

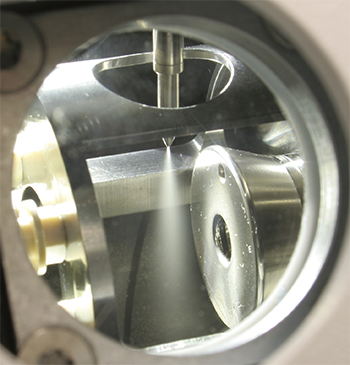

Many lipidomics studies use electrospray ionization. This photo shows the ionization source in a mass spectrometer. The sample is ionized while it’s sprayed from the needle, and gaseous ions then enter the mass detector to the right. (WIKIPEDIA/THETWEAKER)

The LIPID MAPS consortium chose core labs specializing in different lipid classes to lead methods development for each class. Al Merrill of Georgia Tech led the sphingolipid core.

“From the very beginning, the hope was that the field was going to explode,” Merrill said. “Our goal was to do anything we could to accelerate that process, and seeing it happen would be the biggest reward for our efforts.”

The consortium developed a system for classifying lipid types, referring to them by standard names and drawing them consistently. The American labs worked with leading Japanese and European lipid biochemists to reach consensus on the system before publishing it. According to Dennis, this collaboration helped to clarify later conversations.

“Everyone in the world has accepted our nomenclature, our classification and our structure drawing program,” Dennis said. “There is no conference that argues about nomenclature … it’s just accepted by the worldwide community.”

LIPID MAPS labs used identical instruments to determine structures and quantities of thousands of lipid species and built a database where researchers could search for features in mass spectra and match them to known structures.

After the project’s funding expired in 2013, its large-scale collaborative projects came to a halt, but most of its researchers kept working in lipidomics.

Shevchenko, who was trained in proteomics and has worked with lipids for about 20 years, believes LIPID MAPS settled on instruments and approaches a little too early in the process of global methods development.

“With all my respect to colleagues, in 2006 the field was in its infancy,” he said. “Just being able to see lipids and quantify (them) … was a big step forward. People would look at this with big respect. But that doesn’t mean the right thing to do was to standardize the approaches. Because there were no approaches to standardize. Right? There’s no way around this — just to wait and let the field develop.”

“When do you standardize? I think he makes a good point,” Dennis said in reply. “(But) I don’t think LIPID MAPS attempted to set the standard protocol or ever said it did. LIPID MAPS developed the best damn protocol it could, used that approach to measure plasma, and published it.”

Quantifying a lipidome

A LIPID MAPS capstone project was to quantitate as many lipid species as possible in human plasma, using the technologies the consortium had developed. They chose a plasma sample supplied by the National Institute of Standards and Technology, or NIST, pooled from 100 volunteers, middle-aged men and women whose ethnicity matched the average U.S. population.

Standard Reference Material 1950, a pooled sample of plasma from 100 volunteers, has been the subject of numerous lipidomics studies. (NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY)

NIST develops many such reference materials for calibration of clinical assays. This particular sample, called Standard Reference Material 1950, came with known concentrations of cholesterol, triglycerides, free fatty acids, and several steroid hormones and lipid vitamins. Otherwise, the lipid content was largely unknown.

Each LIPID MAPS core laboratory used the quantitative techniques that it had optimized for its class of interest to extract lipids from a vial of SRM 1950, measure them and report back. The consortium measured just shy of 600 lipid species in six classes and published the results in the Journal of Lipid Research.

In 2011, the year after the LIPID MAPS study was published, analytical chemist John Bowden took a job at NIST. With the field of lipidomics expanding, he saw the post as an opportunity to contribute to standardization.

“I tried to spread the message at NIST that we needed to help standardize and harmonize the community in this type of measurement,” said Bowden, now a professor at the University of Florida. “At the time, it was not a community-wide priority.”

Bowden and his team launched a comparison exercise in 2014, using SRM 1950 to determine the variability in lipid identification and quantitation among 30 labs. Bowden said in 2017, “We (asked): ‘How much variability exists in the community right now, with all the different methodologies and philosophies for measuring lipids?’”

Each lab measured lipids in triplicate according to its usual protocols, reporting concentration estimates for anywhere from 100 to 1,500 species. Candice Ulmer, a postdoc on Bowden’s team, said the guidelines were intentionally vague. “We gave them free rein (in order) to see, if there’s no guidance provided, how do they go about assigning concentrations?”

After anonymizing the labs’ results, Bowden’s team calculated a consensus mean for each lipid species: the median of the mean concentrations reported by the individual labs. The median of means was more robust to outlier values, of which there were several, than a weighted average would have been. Ulmer said, “Our idea with the consensus mean values was to provide concentrations that are robust and independent of the instrumentation … and the type of data processing tools you use.”
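The median-of-means idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the NIST team’s actual procedure: the lab names and concentrations are hypothetical, the function name `consensus_value` is invented here, and a plain unweighted mean stands in for the weighted average mentioned above.

```python
from statistics import mean, median


def consensus_value(lab_replicates):
    """Median of the per-lab mean concentrations for one lipid species.

    lab_replicates maps a (hypothetical) lab label to that lab's
    replicate measurements, all in the same concentration units.
    """
    lab_means = [mean(reps) for reps in lab_replicates.values() if reps]
    if not lab_means:
        raise ValueError("no measurements supplied")
    return median(lab_means)


# Hypothetical triplicate measurements of one species from four labs.
example = {
    "lab_A": [10.0, 11.0, 12.0],  # lab mean 11.0
    "lab_B": [9.0, 10.0, 11.0],   # lab mean 10.0
    "lab_C": [12.0, 12.0, 12.0],  # lab mean 12.0
    "lab_D": [30.0, 33.0, 36.0],  # lab mean 33.0, an outlier
}

consensus = consensus_value(example)                    # 11.5
grand_mean = mean(mean(r) for r in example.values())    # 16.5
```

In this toy data set the single outlying lab pulls the plain average up to 16.5, while the median of the lab means stays at 11.5, close to what the other three labs reported.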

The disagreement among labs was considerable. “The problem was that (NIST) wanted to be neutral,” said Kai Simons, the former Max Planck Institute director who now runs a company that participated in the study. “They didn’t want to provoke a critical discussion, which would have been quite devastating.”

Reception of the study was as mixed as the laboratories’ estimations of lipid concentration.

Oliver Fiehn, a metabolomics expert at the University of California, Davis, who participated, said that when measurements are technically challenging and protocols differ, expecting perfect concordance is unrealistic. “People always think that we should all get the same results … (but) every single ring trial shows that there’s always a distribution of values.”

Fiehn called the study a success. “Is the glass half full or half empty? If (others) say it’s half empty, I’d say it’s half full.”

Xianlin Han, who wrote one of the 2003 lipidomics review articles, called the study a failure. “It turned out the result could be a threefold difference,” said Han, of the University of Texas Health Science Center at San Antonio. “So what do the data mean? Later on, if I want to study lipidomics in plasma, I can report any data, refer to this paper, and say, ‘Hey, my data are within this range.’ That’s a disaster. The outcome would be very different if the organizers had spiked a few standards into the NIST plasma sample as controls.”

For Bowden, establishing the lack of agreement on how to conduct and quantify lipidomics experiments was precisely the point. “At the time, we really did not know what the exact issues were in lipid measurement across the community,” he said.

The study helped participating laboratories spot problems in their workflows and got the community talking about measurement quality, Bowden said. “There was a thinking that everybody was doing the best we could, given the resources … but if we put enough energy into certain areas, then maybe we can actually make better lipidomics measurements.”

Identifying features

What would it take to improve lipidomics measurement?

Valerie O'Donnell, a professor at Cardiff University, specializes in analysis of phospholipids and their signaling byproducts. “Up until 12 or 15 years ago, lipid research was a relatively small field,” O’Donnell said. “Everyone knew each other; it’s always been an extremely collaborative but relatively small field of specialists who worked on individual lipid classes.”

Everything changed around 2005, O’Donnell said, when new benchtop mass spectrometers made the technology accessible to more labs. “From then on, you didn’t have to be a physicist and understand how to take apart and put back together a mass spectrometer to use it to measure lipids.”

Instrument resolution also improved, making it possible to distinguish lipid species more accurately. According to Bowden, “We’re uncovering all kinds of new lipids, and we’re able to separate out lipids you couldn’t separate out before.”

These factors have drawn more researchers to look into the lipidome. But that growth brings challenges. “With lots of new people coming into the field,” O’Donnell said, “there are emerging issues around how you identify lipids.”

The major challenge is that any one feature in an MS spectrum could represent a large number of molecular species. To be sure of a lipid’s structure, a researcher needs several dimensions of information, far more than a single MS run provides.

Bowden and his NIST colleagues wrote an article on reporting one’s degree of certainty in identifying a peak in a special issue of the journal Biochimica et Biophysica Acta in 2017. In the same issue, another article explicitly called for standardization of lipidomics data. Its authors include two scientists Bowden met during the NIST study: Kim Ekroos, an independent lipidomics consultant in Finland, and Gerhard Liebisch of the University of Regensburg in Germany.

Liebisch and Ekroos had come across a troubling case of peak misidentification in a paper in the journal Clinical Chemistry. Using standard metabolomics, the authors reported finding six lipids that were higher in healthy volunteers’ serum than in samples from people with Type 2 diabetes.

“We question the identities of the reported biomarkers and the lack of validation thereof,” Liebisch, Ekroos and colleagues wrote in response. They pointed out that the authors had reported more information about acyl chain length and position for some lipid species than their data could reasonably provide. Moreover, some species were at unreasonable masses, suggesting they might be wrongly identified.

The authors of the original study replied that they’d given adequate information under guidelines from the Metabolomics Society. There was, they conceded, an error in one of their annotations: a lipid species with one double bond was described incorrectly as having none, accounting for the wrong mass.

Liebisch said the exchange demonstrated that “lipids should be considered a special case of metabolites, where simple matching of (spectral) features is not sufficient.”

It also motivated the pair to think about problems in the field. Ekroos said, “We are starting to see quite a few errors in published data already … that we want to somehow try to clear up.”

Michael Wakelam, director of the Babraham Institute associated with Cambridge University, has been doing lipidomics studies for more than 25 years and agrees with Ekroos. “We are seeing more and more of this,” Wakelam said of error-prone papers. “I think it’s actually a byproduct of the success of the field.”

The LIPID MAPS website, redesigned in 2018, curates MS data on lipids and collects information about methods and tools.

LIPID MAPS returns

By 2016, it was clear to many experts that lipidomics researchers needed help with standardization and reproducibility. Today, three overlapping groups are working on the problem. One has begun work to revitalize LIPID MAPS as a resource for researchers in the field, a second has focused on technical problems in plasma lipidomics, and a third wants to reform publishing and reporting standards for all lipidomics data.

The first team, led by O’Donnell and Wakelam in the U.K., has spearheaded the continuation of LIPID MAPS. When NIH funding ended in 2013, lead bioinformatician Shankar Subramaniam, a University of California, San Diego, professor, landed a small bridge grant to keep the website online.

“They were at the point where they were thinking that it may have to get shut down,” O’Donnell said. “I felt that was really bad, because we use this (tool) all the time, and we couldn’t do our work without it.”

Wakelam, Dennis, Subramaniam and O’Donnell applied to the U.K.-based Wellcome Trust to revitalize the database. The project migrated to the U.K., and curation of lipid species and MS data resumed on a redesigned website.

“LIPID MAPS wants to play a big role in signposting our lipid biochemistry colleagues to what’s out there for big data analysis,” O’Donnell said. “Previously, it was a research project … but at the moment it’s really (about) the database and having it available as a global online free open access resource.”

This group photo was taken at a 2017 forum on harmonizing plasma lipidomics held in Singapore. After they noticed confusion in the field, Markus Wenk (third from right) and Andrej Shevchenko (fifth from left) invited fellow lipidomics researchers to meet and hash out a path toward reference values for lipids in the blood. (MATEJ ORESIC)

The Singapore workshop

At the conference where Wenk and Shevchenko shared impressions over that cup of coffee, the two also gave the opening and closing keynotes; they discussed finding, respectively, quantitative variations within a healthy individual’s plasma lipidome and further variability among individuals according to age, ethnicity, sex and prescription medications.

Both thought groundwork was needed before biomarker discovery studies went forward. “Rather than study disease or complications, one needs to first actually get an idea of healthy baselines,” Wenk said.

That means finding out which lipids are stable in healthy adults and which ones vary by the hour — and determining how labs might reliably measure each of those. Wenk and Shevchenko knew that if the goal was consensus on these points, they needed other scientists to buy in.

“If you publish a small article and say, ‘Hey guys, we are in a position to teach you how to do lipidomics,’ the field will not respond,” Shevchenko said. “We have to be united.”

Wenk agreed. “I don’t think you can tell scientists what they have to do. You need consensus. We’ve been very careful about this.”

Shevchenko and Wenk reached out to established PIs working on plasma lipidomics. Dennis, Ekroos, Han, Liebisch, Wakelam and 13 others responded, and in April 2017, the two hosted a two-day meeting in Singapore. Members of the group specialized in various lipid chemistries using a variety of instruments and platforms. Their mission: figure out how to harmonize studies of the plasma lipidome.

“Of course, we all do it a little differently,” Dennis said. “Nobody said, ‘Oh, you do it right, Mr. X, we’ll all just use your protocol,’ because everybody’s invested in different approaches.”

The workshop hadn’t been going on long when Tze Ping Loh spoke up. Loh, a consulting physician in the laboratory medicine department at Singapore’s National University Hospital, was a rare bird among the panelists, a clinician in a room full of basic scientists.

“We’re all doing analytical chemistry,” Shevchenko said. “To get grants, we used to call (our work) biomarker or diagnostic discovery. But we don’t do diagnosis. And then Tze Ping, the guy who actually does diagnosis … explained to us what a diagnostic is actually and how far we are from (that). He really hit us in the head.”

Loh explained the practice in laboratory medicine of setting a reference interval, the range of concentrations of a molecule measured in a chosen population when all labs agree to measure in the same way.

To define a reference interval is to come to a consensus on what an analyte is, how to measure it accurately and reproducibly, in which populations it should be measured, and the statistical procedures to calculate it.
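As a rough illustration of the statistical step, the sketch below computes a nonparametric reference interval from measurements in a healthy population. The article does not say which procedure Loh described; taking the central 95% of values (the 2.5th to 97.5th percentiles) is a common convention in laboratory medicine and is assumed here. The function names and the data are hypothetical.

```python
def percentile(sorted_values, p):
    """Percentile by linear interpolation; p is between 0 and 100.

    Expects the values to be sorted in ascending order already.
    """
    if not sorted_values:
        raise ValueError("no values supplied")
    rank = (len(sorted_values) - 1) * p / 100.0
    lower = int(rank)
    upper = min(lower + 1, len(sorted_values) - 1)
    fraction = rank - lower
    return sorted_values[lower] + fraction * (
        sorted_values[upper] - sorted_values[lower]
    )


def reference_interval(values, coverage=95.0):
    """Central interval covering `coverage` percent of the population.

    The 95% default is an assumed convention, not taken from the article.
    """
    ordered = sorted(values)
    tail = (100.0 - coverage) / 2.0
    return percentile(ordered, tail), percentile(ordered, 100.0 - tail)


# Hypothetical concentrations from 101 healthy volunteers: 1.0 to 101.0.
healthy = [float(i) for i in range(1, 102)]
low, high = reference_interval(healthy)  # (3.5, 98.5)
```

A patient’s result can then be reported against `low` and `high`, but only if every lab measures the analyte the same way, which is the part lipidomics has yet to settle.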

The cholesterol test is a good example. In its report, a clinical lab notes how the patient’s total cholesterol levels compare to the reference interval. That gives a robust health indicator that any doctor or nurse can interpret.

A schematic diagram shows estimated molar quantities of various lipid classes in human plasma, measured by mass spectrometry. Each vertical line represents an individual lipid species, and horizontal bands of color represent range estimates. Lipidomics researchers are trying to narrow down these estimates and make sure they match ranges known from clinical chemistry. (BURLA ET AL. / JLR 2019)

Loh’s interjection helped move the conversation from debate over the merits of different technical approaches to comparing measurements among these platforms, Shevchenko said. He attributes the productive conversation in part to Wenk.

“This is Markus’ talent. He’s a guy who has a vision to organize people without insulting them. So he got us together, with (our) different platforms, different approaches, different backgrounds,” Shevchenko said.

Galvanized by that first day, the workshop’s attendees started to lay out a plan for harmonization, beginning with broad-strokes guidelines for lipidomics research.

Balancing biases and adjudicating conflicts among research camps can derail attempts to standardize. A paper in the Journal of Lipid Research that came out of the Singapore working group took no stance on most technical questions. Instead, the authors stressed the importance of using internal standards, determining molar concentrations of each analyte and working toward evidence-based agreements on other technicalities.
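To make the link between internal standards and molar concentrations concrete, here is a minimal sketch of single-point internal-standard quantification, one common way to turn an MS signal into a concentration. It is not drawn from the working group’s paper. The function name, the parameter names and the numbers are hypothetical, and the default `response_factor` of 1.0 assumes the analyte and the spiked standard ionize equally well, which real lipid classes often do not.

```python
def molar_concentration(analyte_signal, standard_signal,
                        standard_conc_um, response_factor=1.0):
    """Estimate an analyte concentration in micromolar.

    analyte_signal and standard_signal are peak areas (same arbitrary
    units) for the analyte and for an internal standard spiked into the
    sample at the known concentration standard_conc_um.
    response_factor is the analyte's signal per mole relative to the
    standard's; 1.0 is an assumption, not a measured value.
    """
    if standard_signal <= 0 or response_factor <= 0:
        raise ValueError("standard signal and response factor must be positive")
    ratio = analyte_signal / standard_signal
    return ratio * standard_conc_um / response_factor


# Hypothetical peak areas: the analyte gives twice the signal of a
# standard spiked at 2.0 micromolar, so the estimate is 4.0 micromolar.
estimate = molar_concentration(50_000.0, 25_000.0, 2.0)
```

Because the result is a molar quantity rather than a fold change, two labs using different instruments can in principle compare it directly.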

At a follow-up meeting in November, researchers were invited to help select the lipid species most amenable to clinical development. “When you come to all this granularity — they’re all scientists, right?” Wenk said. “By paring it to a single task, my hope is that it will be easier to come to a general best solution to that particular problem.”

The group settled on ceramides and bile acids because these two classes of molecule have well-defined roles in disease and are thought to be abundant, durable and easy to isolate. The research community can now work toward defining a method for measuring these specific lipids and determining their range in healthy individuals, Wenk said.

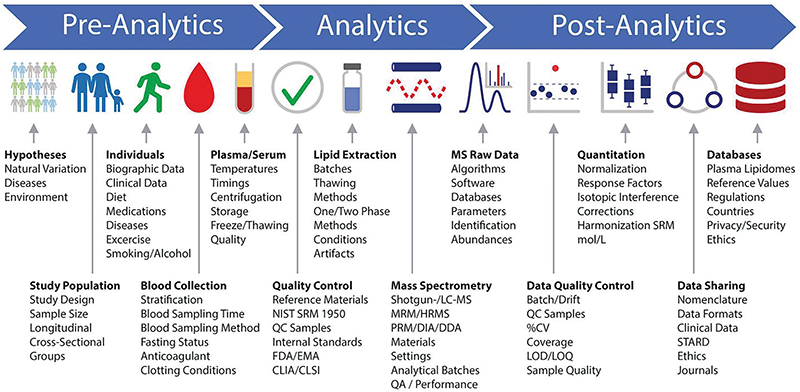

A lipidomics workflow schematic developed by participants at the Singapore lipidomics forum shows many points where a researcher’s decisions can introduce variability in a lipidomics experiment, even before a sample is loaded into the mass spectrometer. For example, many labs store and handle blood samples on ice to slow biochemical reactions and delay lipid oxidation. But that chilling may activate platelets, which then release bioactive lipids. Research groups interested in measuring those particular lipids reproducibly may handle samples at room temperature, perplexing colleagues from other labs. (BURLA ET AL. / JLR 2019)

The standards initiative

Ekroos and Liebisch, the researchers who took biomarker hunters to task in Clinical Chemistry, participated in the Singapore meeting but found it too narrow in sco